Getting the Response Content-Type with HttpClient

Published: 2017-01-19 18:04 | Blog category: httpclient

Every web response has a content type, which the server reports in the Content-Type response header.

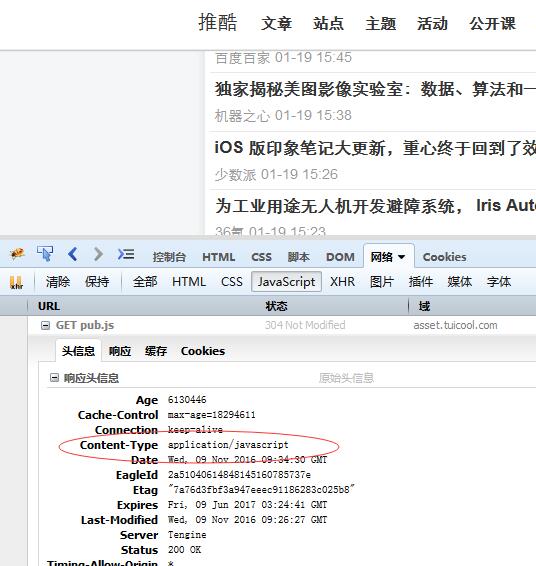

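Firebug in Firefox shows it among the response headers.

Of course, we can also read it through the HttpClient API.

Calling getContentType().getValue() on the HttpEntity returns the response's content type: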

    package com.open1111.httpclient.chap02;

    import org.apache.http.Header;
    import org.apache.http.HttpEntity;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.util.EntityUtils;

    public class Demo2 {

        public static void main(String[] args) throws Exception {
            // try-with-resources closes the response and the client even if the request fails
            try (CloseableHttpClient httpClient = HttpClients.createDefault()) { // create the HttpClient instance
                HttpGet httpGet = new HttpGet("http://www.java1234.com"); // create the GET request
                // set the User-Agent request header
                httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:50.0) Gecko/20100101 Firefox/50.0");
                try (CloseableHttpResponse response = httpClient.execute(httpGet)) { // execute the HTTP GET request
                    HttpEntity entity = response.getEntity(); // get the response entity
                    // the entity, or its Content-Type header, may be null (for example, an empty body)
                    Header contentType = (entity == null) ? null : entity.getContentType();
                    System.out.println("Content-Type:" + (contentType == null ? "(none)" : contentType.getValue()));
                    //System.out.println("Page content:" + EntityUtils.toString(entity, "utf-8")); // get the page content
                }
            }
        }
    }

Output:

Content-Type:text/html

Ordinary pages are usually text/html, though some responses also carry an encoding. For example, requesting www.tuicool.com prints:

Content-Type:text/html; charset=utf-8
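Because the value may carry a charset parameter, comparing the raw string against "text/html" would miss responses like the one above. The following is a minimal sketch, using only the JDK, of splitting a header value into its MIME type and charset. The class name `ContentTypeValue` and its `parse` method are hypothetical helpers introduced for illustration; they are not part of HttpClient.

```java
import java.util.Locale;

// Hypothetical helper (not part of HttpClient): splits a raw Content-Type
// header value into its MIME type and optional charset parameter.
final class ContentTypeValue {
    final String mimeType; // lower-cased MIME type, e.g. "text/html"
    final String charset;  // charset parameter, or null when absent

    private ContentTypeValue(String mimeType, String charset) {
        this.mimeType = mimeType;
        this.charset = charset;
    }

    static ContentTypeValue parse(String headerValue) {
        if (headerValue == null) {
            return new ContentTypeValue("", null); // assumption: treat a missing header as "unknown"
        }
        // limit -1 keeps trailing empty segments, so parts[0] always exists
        String[] parts = headerValue.split(";", -1);
        String mime = parts[0].trim().toLowerCase(Locale.ROOT);
        String charset = null;
        for (int i = 1; i < parts.length; i++) {
            String param = parts[i].trim();
            int eq = param.indexOf('=');
            if (eq > 0 && param.substring(0, eq).trim().equalsIgnoreCase("charset")) {
                // drop optional quotes, as in charset="utf-8"
                charset = param.substring(eq + 1).trim().replace("\"", "");
            }
        }
        return new ContentTypeValue(mime, charset);
    }

    public static void main(String[] args) {
        ContentTypeValue v = parse("text/html; charset=utf-8");
        System.out.println(v.mimeType + " / " + v.charset);
    }
}
```

If HttpClient 4.x is already on the classpath, its `org.apache.http.entity.ContentType` class should cover the same ground (for example `ContentType.getOrDefault(entity)` followed by `getMimeType()` and `getCharset()`), so a hand-written parser like this is mainly useful for understanding the header format.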

When requesting a JS file, such as http://www.java1234.com/static/js/jQuery.js

the output is:

Content-Type:application/javascript

When requesting a file, such as http://central.maven.org/maven2/HTTPClient/HTTPClient/0.3-3/HTTPClient-0.3-3.jar

the output is:

Content-Type:application/java-archive

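There are many more Content-Type values. Why does this matter for a crawler? While crawling, we can use the Content-Type to pick out the pages we want to crawl, or to filter out the ones we need to skip.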
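As a rough illustration of that filtering idea, the sketch below decides whether a response is worth parsing as a page based on its Content-Type value. The class `ContentTypeFilter`, the method `shouldCrawl`, and the set of accepted types are all assumptions made for this example; a real crawler would pick its own list.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Hypothetical crawler-side filter; the accepted types are illustrative only.
final class ContentTypeFilter {
    private static final Set<String> CRAWLABLE =
            new HashSet<>(Arrays.asList("text/html", "application/xhtml+xml"));

    // Returns true when the Content-Type value names a page type we want to crawl.
    static boolean shouldCrawl(String contentType) {
        if (contentType == null) {
            return false; // assumption: skip responses that declare no type
        }
        // ignore parameters such as "; charset=utf-8"
        int semicolon = contentType.indexOf(';');
        String mime = (semicolon >= 0 ? contentType.substring(0, semicolon) : contentType)
                .trim()
                .toLowerCase(Locale.ROOT);
        return CRAWLABLE.contains(mime);
    }

    public static void main(String[] args) {
        String[] samples = {
            "text/html",
            "text/html; charset=utf-8",
            "application/javascript",
            "application/java-archive"
        };
        for (String sample : samples) {
            System.out.println(sample + " -> " + shouldCrawl(sample));
        }
    }
}
```

With the four values seen in this post, only the two text/html variants pass the filter; the script and the jar are skipped. The string passed in would be whatever `entity.getContentType().getValue()` returned for the response.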

Keywords:

httpClient

Content-Type

Author: Java1234_小锋

